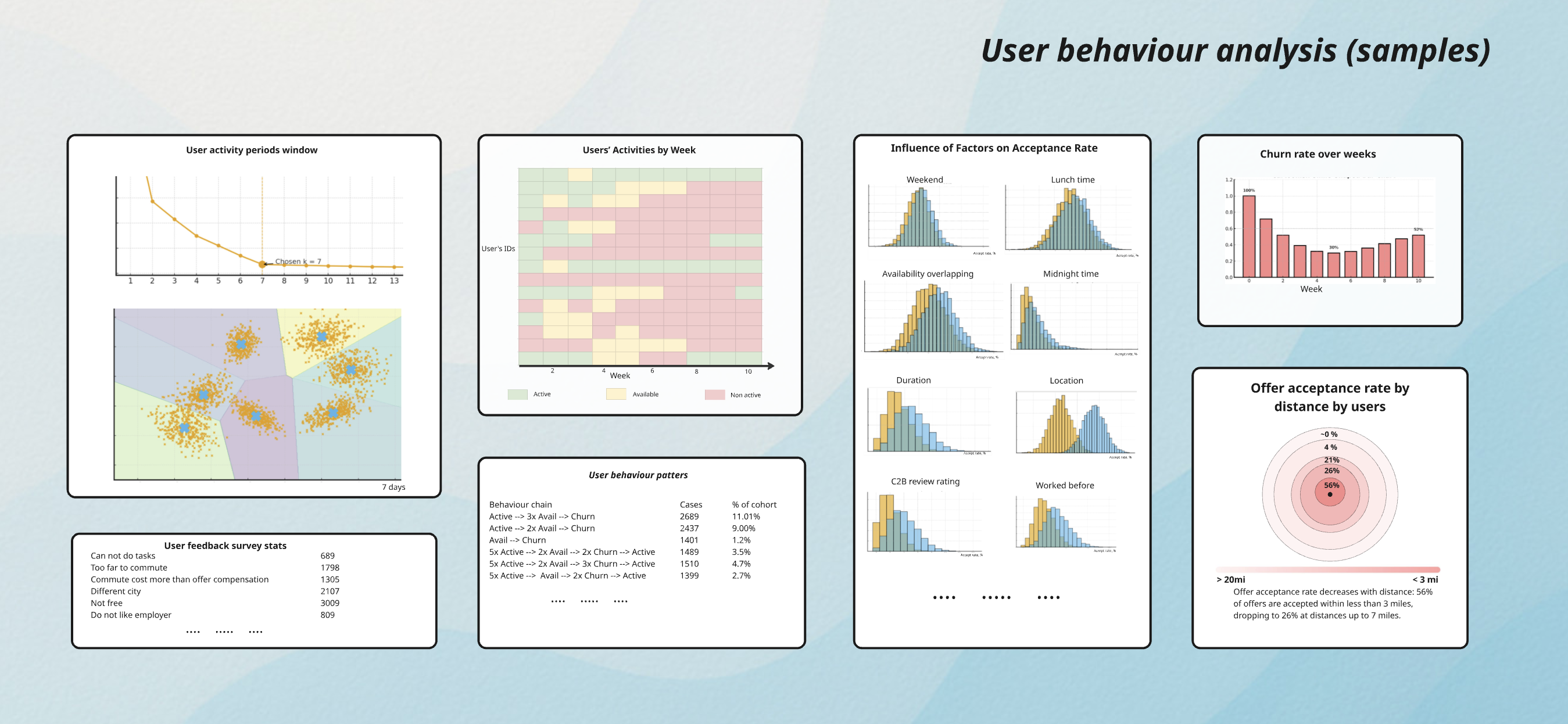

The project began with a deep analysis of user behavior. I segmented users into three main categories based on their activity patterns on the platform and defined activity windows (e.g., 1-day, 4-day, or weekly) to capture engagement dynamics and distinguish active from inactive users. This allowed me to identify the key drivers of engagement, uncover early signals of churn, and calculate core business metrics such as lifetime value (LTV), churn rate, and retention rate. I also analyzed seasonal behavior to better understand demand cycles and user availability (Pic. 1).
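
To make the activity-window idea concrete, here is a minimal Python sketch assuming a plain event log of `(user_id, date)` pairs. The function names, the three segment labels (`frequent`, `occasional`, `rare`) and their day-count thresholds are hypothetical illustrations, not the definitions used in the project. A user counts as active in a window if they have at least one event in it; retention is the share of one window's active users who are active again in the next window, and churn is its complement.

```python
from datetime import date, timedelta

Event = tuple[str, date]  # (user_id, day of activity)

def active_users(events: list[Event], as_of: date, window_days: int) -> set[str]:
    """Users with at least one event in the window (as_of - window_days, as_of]."""
    start = as_of - timedelta(days=window_days)
    return {user for user, day in events if start < day <= as_of}

def retention_rate(events: list[Event], first_end: date, second_end: date, window_days: int) -> float:
    """Share of users active in the first window who are active again in the second."""
    cohort = active_users(events, first_end, window_days)
    if not cohort:
        return 0.0
    returning = cohort & active_users(events, second_end, window_days)
    return len(returning) / len(cohort)

def segment(events: list[Event], user_id: str, as_of: date, period_days: int = 28) -> str:
    """Bucket a user by distinct active days in the period (hypothetical thresholds)."""
    start = as_of - timedelta(days=period_days)
    active_days = {day for user, day in events if user == user_id and start < day <= as_of}
    if len(active_days) >= 8:
        return "frequent"
    if len(active_days) >= 2:
        return "occasional"
    return "rare"

events = [
    ("a", date(2021, 3, 1)), ("b", date(2021, 3, 2)),
    ("a", date(2021, 3, 9)), ("c", date(2021, 3, 10)),
]
weekly_retention = retention_rate(events, date(2021, 3, 7), date(2021, 3, 14), window_days=7)
print(weekly_retention, 1 - weekly_retention)  # retention 0.5, churn 0.5
```

Changing `window_days` to 1, 4 or 7 gives the kind of 1-day, 4-day and weekly windows described in the text; which window suits a given segment is an empirical choice.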

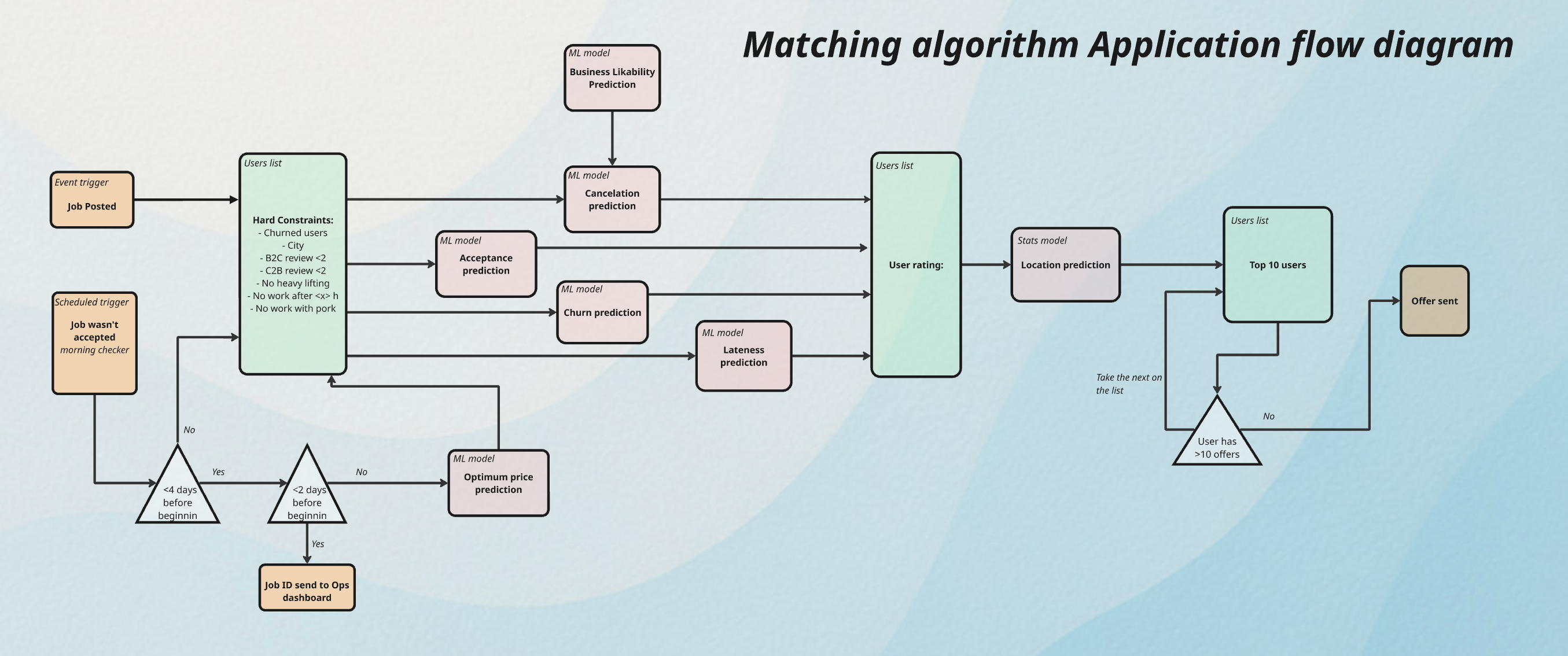

Next, I designed a matching algorithm with AI-driven components to optimize how candidates were connected to job offers. The algorithm searched the user base for the best matches while capping each user at no more than 10 active offers. This cap ensured fairness by limiting bias toward the most active candidates, and it reduced churn risk among users with negative past experiences on the platform.
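
The 10-offer cap can be illustrated with a simple greedy pass, shown here as a Python sketch. It assumes each offer already comes with a ranked candidate list (best match first) and a count of offers each user currently holds; `assign_offers` and its `per_offer` parameter are hypothetical, and a greedy pass is only one way to enforce the cap, not necessarily how the production algorithm did it.

```python
from collections import defaultdict

MAX_ACTIVE_OFFERS = 10  # cap stated in the text

def assign_offers(ranked_candidates, active_counts=None, max_active=MAX_ACTIVE_OFFERS, per_offer=1):
    """Send each offer to its top-ranked candidates, skipping users already at the cap.

    ranked_candidates: dict offer_id -> list of user_ids, best match first.
    active_counts: dict user_id -> number of offers the user currently holds.
    Returns (assignments, updated_counts).
    """
    counts = defaultdict(int, active_counts or {})
    assignments = {}
    for offer_id, candidates in ranked_candidates.items():
        chosen = []
        for user in candidates:
            if len(chosen) == per_offer:
                break  # this offer has reached its hypothetical per-offer limit
            if counts[user] < max_active:
                chosen.append(user)
                counts[user] += 1
        assignments[offer_id] = chosen
    return assignments, dict(counts)

ranked = {"offer_1": ["u1", "u2"], "offer_2": ["u1", "u2"]}
assignments, counts = assign_offers(ranked, active_counts={"u1": 9})
print(assignments)  # {'offer_1': ['u1'], 'offer_2': ['u2']}
```

Because `u1` already holds 9 offers, it reaches the cap after `offer_1`, so `offer_2` falls through to `u2`. In a greedy pass the processing order matters; a global formulation such as min-cost flow would remove that dependency at the cost of more complexity.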

Hard filters were applied upfront, while positive behavior and reliability were factored in through machine learning models that scored and ranked the remaining candidates.
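
A minimal sketch of the filter-then-rank step, assuming two hard filters (matching city and right to work) and two behavioral features (reliability and positive feedback). All field names are hypothetical, and the hand-set logistic weights merely stand in for a trained model's coefficients; in the project, these scores came from machine learning models.

```python
import math
from dataclasses import dataclass

@dataclass
class Candidate:
    user_id: str
    city: str
    has_right_to_work: bool
    reliability: float        # hypothetical: share of accepted shifts actually worked, 0..1
    positive_feedback: float  # hypothetical: share of positive reviews, 0..1

# Hypothetical weights standing in for a trained model's coefficients.
WEIGHTS = {"reliability": 3.0, "positive_feedback": 2.0}
BIAS = -2.5

def passes_hard_filters(c: Candidate, offer_city: str) -> bool:
    """Non-negotiable eligibility rules applied before any scoring."""
    return c.has_right_to_work and c.city == offer_city

def score(c: Candidate) -> float:
    """Logistic score in (0, 1), a placeholder for the model's predicted probability."""
    z = BIAS + WEIGHTS["reliability"] * c.reliability + WEIGHTS["positive_feedback"] * c.positive_feedback
    return 1.0 / (1.0 + math.exp(-z))

def rank_candidates(candidates: list[Candidate], offer_city: str) -> list[Candidate]:
    """Drop ineligible candidates, then sort the rest by score, best first."""
    eligible = [c for c in candidates if passes_hard_filters(c, offer_city)]
    return sorted(eligible, key=score, reverse=True)

candidates = [
    Candidate("A", "London", True, 0.9, 0.8),
    Candidate("B", "London", True, 0.5, 0.5),
    Candidate("C", "Leeds", True, 1.0, 1.0),   # fails the city filter
    Candidate("D", "London", False, 1.0, 1.0),  # fails the right-to-work filter
]
print([c.user_id for c in rank_candidates(candidates, "London")])  # ['A', 'B']
```

Keeping the hard filters outside the model means eligibility rules are never traded off against a high score, while the model only orders candidates who are already allowed to take the shift.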

To further increase efficiency, I implemented dynamic pricing for offers: if an offer was still unaccepted once its start date was 4 days away, its price was adjusted. This helped prevent unattractive stints from going unfilled.
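
One way to express the 4-day repricing trigger is a step-up rule on the pay rate, sketched below in Python. Only the 4-day threshold comes from the text; the +5% daily step, the +20% ceiling and the function name `reprice` are hypothetical choices for illustration.

```python
REPRICE_WINDOW_HOURS = 96  # 4 days before start, per the text
STEP = 0.05                # hypothetical: +5% for each day the offer stays open inside the window
MAX_UPLIFT = 0.20          # hypothetical: never exceed +20% over the base rate

def reprice(base_rate: float, hours_to_start: float, accepted: bool) -> float:
    """Return the rate to show for an offer, raising it while it stays unaccepted near its start."""
    if accepted or hours_to_start > REPRICE_WINDOW_HOURS:
        return base_rate
    # 1 on entering the window, +1 for each further full day without acceptance
    days_in_window = int((REPRICE_WINDOW_HOURS - hours_to_start) // 24) + 1
    uplift = min(MAX_UPLIFT, STEP * days_in_window)
    return round(base_rate * (1 + uplift), 2)

for hours in (120, 96, 50, 0):
    print(hours, reprice(10.0, hours, accepted=False))
```

Capping the uplift keeps a hard-to-fill stint from becoming unboundedly expensive; a real step size and ceiling would need tuning against fill rates and margins.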

On top of that, the Operations team was equipped with a dashboard to intervene when an offer remained unaccepted less than 48 hours before its start (Pic. 2).
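
The dashboard's escalation rule reduces to a filter over open offers. This Python sketch assumes an `Offer` record with a start time and an acceptance flag (hypothetical field names); only the 48-hour threshold comes from the text, and excluding offers that have already started is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

ESCALATION_WINDOW = timedelta(hours=48)  # threshold stated in the text

@dataclass
class Offer:
    offer_id: str
    start: datetime
    accepted: bool

def offers_needing_intervention(offers: list[Offer], now: datetime) -> list[Offer]:
    """Unaccepted offers starting within the next 48 hours, soonest first."""
    urgent = [o for o in offers if not o.accepted and now <= o.start < now + ESCALATION_WINDOW]
    return sorted(urgent, key=lambda o: o.start)

now = datetime(2021, 6, 1, 12, 0)
offers = [
    Offer("o1", now + timedelta(hours=30), accepted=False),
    Offer("o2", now + timedelta(hours=60), accepted=False),
    Offer("o3", now + timedelta(hours=10), accepted=True),
    Offer("o4", now + timedelta(hours=5), accepted=False),
]
print([o.offer_id for o in offers_needing_intervention(offers, now)])  # ['o4', 'o1']
```

Sorting by start time puts the most urgent shifts at the top of the queue, which is a natural default for an operations view.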

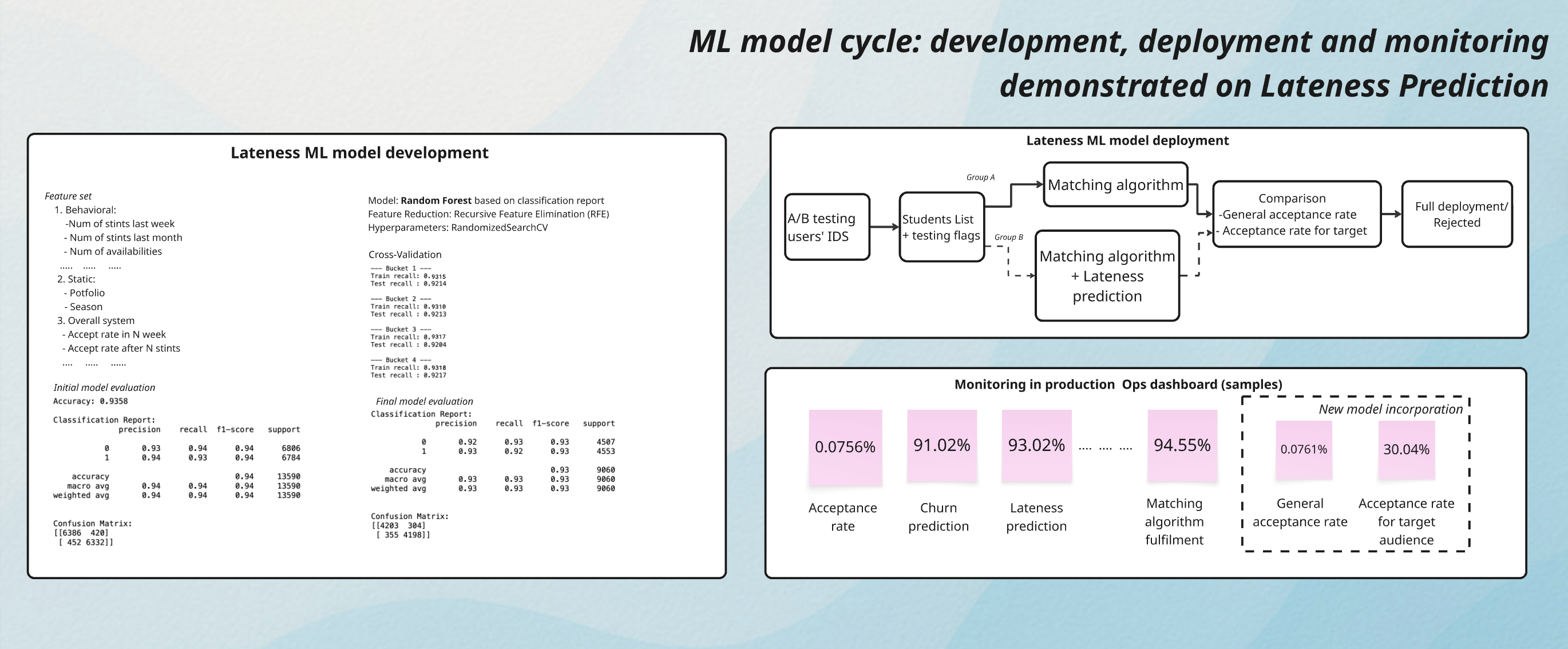

Finally, I established a structured machine learning development cycle covering feature collection, model evaluation, robustness testing, and hyperparameter tuning. To ensure safe deployment, new models were validated against key metrics before going live, and their performance was then monitored continuously in production (Pic. 3).
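
The pre-deployment check can be framed as a promotion gate that compares a candidate model with the one in production. This Python sketch assumes all metrics are higher-is-better and uses hypothetical metric names (`auc`, `fill_rate`) and tolerances; the project's actual key metrics are not specified.

```python
def should_promote(candidate: dict[str, float], production: dict[str, float],
                   primary: str, guardrails: dict[str, float]) -> bool:
    """Return True if the candidate beats production on the primary metric and
    no guardrail metric drops by more than its allowed tolerance."""
    if candidate[primary] <= production[primary]:
        return False  # no improvement on the metric the new model is meant to move
    for metric, tolerance in guardrails.items():
        if candidate[metric] < production[metric] - tolerance:
            return False  # regression beyond tolerance on a guardrail metric
    return True

production = {"auc": 0.80, "fill_rate": 0.90}
candidate = {"auc": 0.82, "fill_rate": 0.895}
print(should_promote(candidate, production, primary="auc", guardrails={"fill_rate": 0.01}))  # True
```

The same comparison can be rerun on live data after release as one input to ongoing production monitoring.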

Stint

Lead Data Scientist

Dec 2020 — Apr 2022
