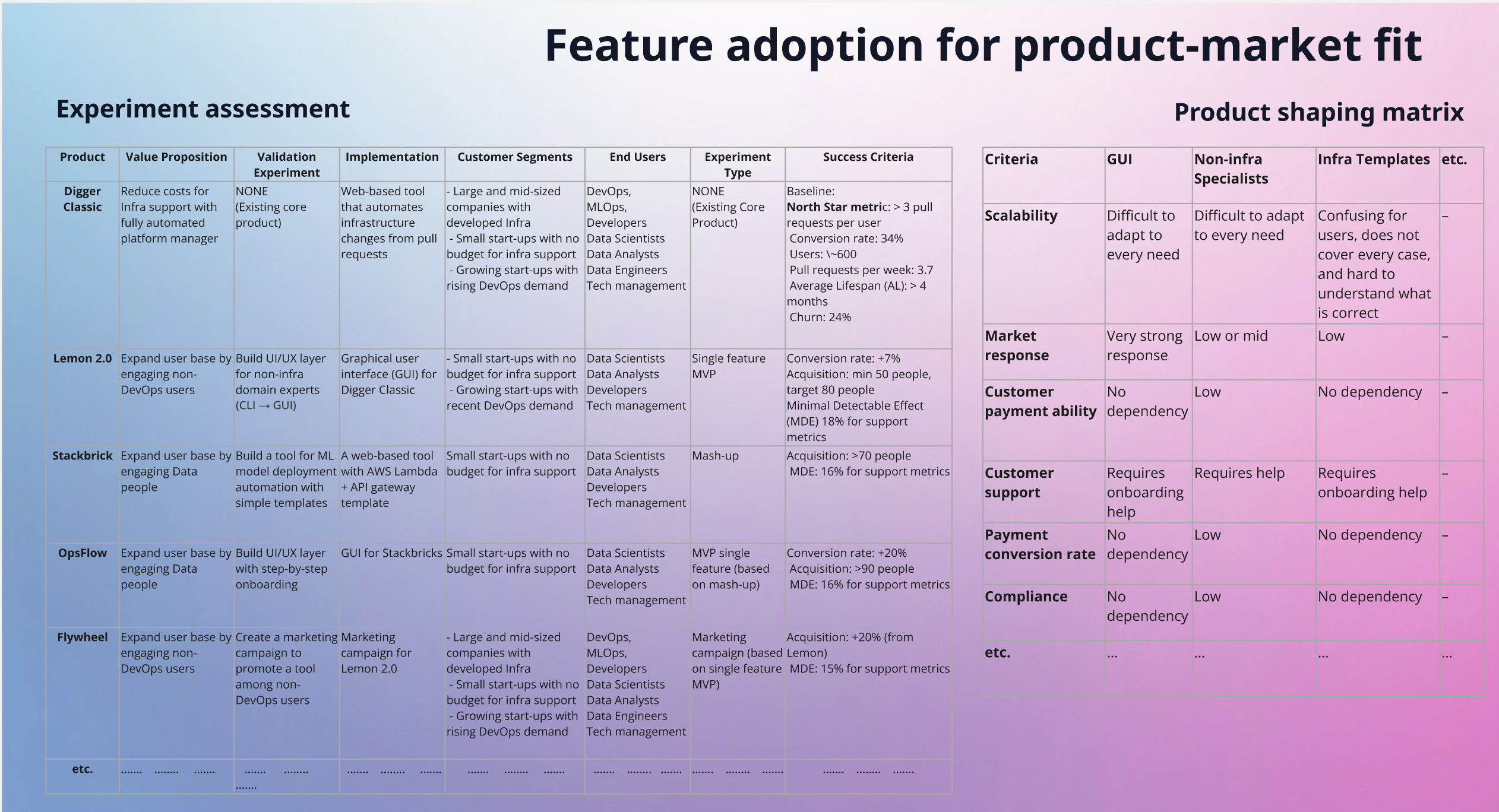

When I joined the company, experimentation was happening actively but without structure. I introduced a product experimentation framework to avoid overlapping hypotheses, capture insights systematically, and improve decision-making.

The experiment cycle consisted of four stages:

1. Idea Generation: Collecting and analyzing customer feedback (support tickets, interviews, forums), researching the market (competitor benchmarking, trend analysis), and conducting value estimation (TAM, SAM, SOM, customer payment readiness).

2. Preparation: Defining the hypothesis and success criteria, setting measurable metrics, building prototypes, and integrating analytics.

3. Validation: Running controlled launches (e.g., on ProductHunt), promoting the solution to target users, and monitoring adoption and engagement metrics.

4. Analysis and Adaptation: Reviewing outcomes, adapting product direction, and making evidence-based decisions (Pic. 1).

Although the framework was shared across all experiments, each experiment had unique research inputs, distinct user journeys and infrastructure implementations, and its own metrics for adoption and success.

Pic. 2 illustrates how the StackBricks experiment was launched.

By systematically assessing and documenting experiments, I reduced the number of live experiments by eliminating weak hypotheses early. I also standardized core product metrics and extrapolated them with high confidence for fundraising.

Through these experiments, I defined the acquisition strategy and identified the Ideal Customer Profile (ICP).

These insights were pivotal in achieving product-market fit, accelerating customer growth, and directly contributing to raising the next funding round (Pic. 3).

Digger

Principal Data Scientist

March 2022 — Jan 2024